VMware vSphere HA differences between vSphere 4.x and vSphere 5.x

What is VMware vSphere HA?

VMware vSphere High Availability (HA) is a cluster-level feature that provides cost-effective high availability for virtual machines running on ESXi servers.

How does it work?

As already mentioned, HA is a cluster-level feature: we put multiple ESXi servers into a cluster (a cluster can hold at most 32 servers) and enable HA on that cluster. If an ESXi server in the cluster fails (for reasons such as an ESXi OS crash or a failure of the hardware ESXi runs on), HA automatically restarts all the virtual machines that were running on the failed host on a healthy host in the cluster.

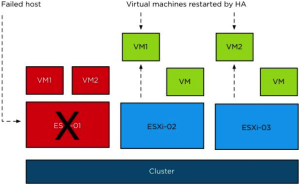

The figure above shows how HA restarts virtual machines when a failover happens in an HA-enabled cluster.

In the figure we have three ESXi servers (ESXi-01, ESXi-02, ESXi-03). VM1 and VM2 run on ESXi-01, while ESXi-02 and ESXi-03 each run one VM. Once HA detects that ESXi-01 has failed, the VMs that were running on ESXi-01 are restarted on the healthy servers (ESXi-02 and ESXi-03) in the same cluster.
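As a rough illustration of this failover, the Python sketch below restarts the VMs of a failed host on the surviving hosts. The function name `restart_vms_after_failure`, the VM names VM3 and VM4, and the "fewest VMs first" placement rule are assumptions made for this example; they are not the placement logic HA actually uses.

```python
def restart_vms_after_failure(cluster, failed_host):
    """Return a new placement with the failed host's VMs restarted elsewhere.

    cluster maps host name -> list of VM names. Placement picks the
    surviving host that currently runs the fewest VMs (an assumption made
    for this sketch, not HA's real placement logic).
    """
    survivors = {h: list(vms) for h, vms in cluster.items() if h != failed_host}
    if not survivors:
        raise RuntimeError("no healthy host left in the cluster")
    for vm in cluster[failed_host]:
        # Choose the least-loaded survivor; ties resolve by host name.
        target = min(survivors, key=lambda h: (len(survivors[h]), h))
        survivors[target].append(vm)
    return survivors


cluster = {
    "ESXi-01": ["VM1", "VM2"],
    "ESXi-02": ["VM3"],  # VM3 and VM4 are hypothetical names
    "ESXi-03": ["VM4"],
}
after = restart_vms_after_failure(cluster, "ESXi-01")
print(after)
```

With this simple rule, VM1 and VM2 end up spread across ESXi-02 and ESXi-03 rather than both landing on one host.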

Below are the differences between VMware vSphere HA in vSphere 4.x and vSphere 5.x

Changes in VMware vSphere High Availability agent and its functionality

In vSphere 5.x, VMware introduced a new HA agent, FDM (Fault Domain Manager). It replaces the vSphere 4.x HA agent, AAM (Automated Availability Manager).

When HA is enabled in vSphere 5.x, the FDM agent is initialized on all ESXi hosts in parallel, whereas in vSphere 4.x the AAM agent was installed serially, one host at a time.

FDM is one of the most important agents on an ESXi host. Unlike AAM, FDM is a single-process agent, but it spawns a watchdog process. In the unlikely event of an agent failure, the watchdog detects it and restarts the agent, so HA functionality is restored without anyone noticing that it failed.
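The watchdog idea can be sketched in a few lines of Python. This is only a toy model: the `Agent` and `Watchdog` classes are hypothetical stand-ins and do not reflect how FDM or its watchdog are actually implemented.

```python
class Agent:
    """Stand-in for a single-process HA agent (hypothetical, not FDM itself)."""

    def __init__(self):
        self.running = True
        self.starts = 1

    def crash(self):
        self.running = False

    def start(self):
        self.running = True
        self.starts += 1


class Watchdog:
    """Checks the agent and restarts it when it is found dead."""

    def __init__(self, agent):
        self.agent = agent
        self.restarts = 0

    def check(self):
        # Called periodically; restarts the agent only if it has stopped.
        if not self.agent.running:
            self.agent.start()
            self.restarts += 1
        return self.agent.running


agent = Agent()
watchdog = Watchdog(agent)
agent.crash()      # simulate an agent failure
watchdog.check()   # the watchdog notices and restarts the agent
print(agent.running, watchdog.restarts)
```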

Primary/Secondary node concept has been removed

In vSphere 4.x, HA used the concept of primary and secondary nodes. When ESXi hosts are added to an HA-enabled cluster, the first 5 ESXi servers become primary nodes and the remaining ones become secondary nodes. The primary nodes take care of handling failures.

In vSphere 5.x, an HA-enabled cluster has one ESXi host that acts as the master, and all remaining ESXi hosts are slaves. The only scenario in which an HA cluster has more than one master is a network partition.
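To make the two role models concrete, here is a small Python sketch. The function names and the seven host names are made up for illustration, and the 5.x master is simply passed in, because how the master gets elected is not modelled here.

```python
PRIMARY_LIMIT = 5  # vSphere 4.x: the first five hosts become primaries


def roles_4x(hosts_in_join_order):
    """Map each host to 'primary' or 'secondary' by join order."""
    return {
        host: "primary" if index < PRIMARY_LIMIT else "secondary"
        for index, host in enumerate(hosts_in_join_order)
    }


def roles_5x(hosts, master):
    """Map each host to 'master' or 'slave'; the election is not modelled."""
    if master not in hosts:
        raise ValueError("master must be one of the cluster hosts")
    return {host: "master" if host == master else "slave" for host in hosts}


hosts = [f"ESXi-{n:02d}" for n in range(1, 8)]  # seven hypothetical hosts
print(roles_4x(hosts))
print(roles_5x(hosts, master="ESXi-03"))
```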

HA DNS dependency has been removed

In vSphere 5.x, HA no longer depends on DNS because it works with IP addresses only, whereas in vSphere 4.x HA relied on the ESXi host names being resolvable through DNS. The character limit on ESXi host names has also been lifted in vSphere 5.x; prior to vSphere 5.x, FQDNs were limited to 26 characters.

Note: As a best practice in vSphere 5.x, still add/register the ESXi hosts in vCenter Server using their FQDN.

Changes in Log files

Another major change is that the HA log files are now part of the normal logging functionality that ESXi offers. This means the log file lives in /var/log and is picked up by syslog. It is called fdm.log.

Change in Virtual Machine Protection

In vSphere 4.x, virtual machine protection was handled by vpxd, which notified AAM through a vpxa module called vmap. In vSphere 5.x, virtual machine protection happens on several layers, but it is ultimately the responsibility of vCenter.

Changes in VM restart attempts

In vSphere 4.x, the maximum number of restart retries attempted for a VM was 6. In vSphere 5.x this has changed: the maximum is 5 restart attempts, including the initial restart attempt.

Changes in Isolation Response

When an ESXi host is isolated, HA looks at the isolation response setting and triggers whatever is configured (Leave powered on, Power off, or Shut down). In vSphere 5.x, when a slave ESXi host is isolated, HA waits for 30 seconds before triggering the isolation response.

In vSphere 4.x, it was possible to configure this wait time using the advanced setting “das.failuredetectiontime”. In vSphere 5.x, that advanced setting can no longer be configured. If the isolation response delay needs to be changed, add the advanced setting “das.config.fdm.isolationPolicyDelaySec” instead.
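As a small aid for keeping the two setting names apart, the Python sketch below returns the advanced option to set for a given vSphere major version. The helper `isolation_delay_option` and the example value of 60 are assumptions for illustration; the value is passed through unchanged, so check which unit each setting expects before using it.

```python
# Advanced setting that controls the wait time, per vSphere major version.
ISOLATION_DELAY_KEYS = {
    4: "das.failuredetectiontime",
    5: "das.config.fdm.isolationPolicyDelaySec",
}


def isolation_delay_option(major_version, value):
    """Return {setting name: value} for the given vSphere major version.

    The value is not converted; the caller is responsible for the unit.
    """
    try:
        key = ISOLATION_DELAY_KEYS[major_version]
    except KeyError:
        raise ValueError(
            f"unsupported vSphere major version: {major_version}"
        ) from None
    return {key: str(value)}


print(isolation_delay_option(5, 60))
```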

Change in HA & DPM working together

In vSphere 4.x, disabling Admission Control on a DPM-enabled cluster could seriously impact availability. With Admission Control disabled, DPM could place all hosts except one in standby mode to reduce total power consumption. If that single remaining host then failed, all running virtual machines would go down with it.

In vSphere 5.x, this behavior has changed: when DPM is enabled, HA will ensure that there are always at least two hosts powered up for failover purposes.
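The difference is easy to express as arithmetic. The following Python sketch models only the case described here (DPM enabled, Admission Control disabled); the function names are hypothetical.

```python
def min_hosts_powered_on(major_version):
    """Hosts that stay powered on with DPM enabled and Admission Control disabled."""
    if major_version == 4:
        return 1  # 4.x: DPM could leave a single host running
    if major_version == 5:
        return 2  # 5.x: HA keeps at least two hosts up for failover
    raise ValueError(f"unsupported vSphere major version: {major_version}")


def max_standby_hosts(total_hosts, major_version):
    """Upper bound on hosts DPM may place in standby mode."""
    return max(total_hosts - min_hosts_powered_on(major_version), 0)


for version in (4, 5):
    print(version, max_standby_hosts(8, version))
```

For an 8-host cluster this gives up to 7 standby hosts in 4.x and up to 6 in 5.x.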

Datastore Heartbeating has been introduced in vSphere 5.x

In vSphere 4.x, when a host is network isolated (for example, a pulled physical cable or loss of network connectivity to the rest of the HA cluster), the primary nodes no longer receive any heartbeat from it and the isolation response is triggered. The problem is that the host is only isolated, not dead. To handle this scenario and reduce false positives, VMware introduced datastore heartbeating in vSphere 5.x: it provides a second heartbeat channel through shared datastores, which allows a cross-check on whether the host is really down.
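The cross-check can be pictured as a simple decision over the two heartbeat channels. The Python sketch below is a deliberately simplified model with a hypothetical function name; the real agent takes more signals into account than these two booleans.

```python
def classify_host(network_heartbeat, datastore_heartbeat):
    """Classify a host from its two heartbeat channels (simplified model)."""
    if network_heartbeat:
        return "alive"
    if datastore_heartbeat:
        # No network heartbeat, but the host still updates the datastore:
        # it is running and only cut off from the management network.
        return "isolated or partitioned"
    return "failed"


print(classify_host(True, True))
print(classify_host(False, True))
print(classify_host(False, False))
```

Without the datastore channel, the second and third cases would look identical from the outside, which is exactly the false positive that datastore heartbeating is meant to reduce.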

Enhancement in HA Admission Control Policy

In vSphere 4.x, the maximum number of host failures that could be tolerated was 4. In vSphere 5.x, the “Host failures the cluster tolerates” admission control policy was enhanced to allow up to 31 host failures.

The Percentage based admission control policy allows you to specify percentages for both CPU and memory separately.
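To show what separate CPU and memory percentages mean in practice, here is a simplified Python sketch of an admission check. The function name, the dictionary layout, and all numbers are assumptions for illustration; the real calculation also accounts for details such as memory overhead that are left out here.

```python
def admits_power_on(total, reserved, vm_reservation, failover_pct):
    """Check a power-on against separate CPU and memory failover percentages.

    total, reserved and vm_reservation are dicts with 'cpu' (MHz) and
    'mem' (MB); failover_pct holds the percentage to keep free per resource.
    """
    for resource in ("cpu", "mem"):
        free_after = total[resource] - reserved[resource] - vm_reservation[resource]
        free_pct = 100.0 * free_after / total[resource]
        if free_pct < failover_pct[resource]:
            return False  # this resource would drop below its reserved share
    return True


total = {"cpu": 24000, "mem": 96000}       # hypothetical cluster capacity
reserved = {"cpu": 12000, "mem": 48000}    # already reserved by running VMs
policy = {"cpu": 25, "mem": 25}            # failover capacity, set per resource

small_vm = {"cpu": 2000, "mem": 20000}
large_vm = {"cpu": 2000, "mem": 30000}
print(admits_power_on(total, reserved, small_vm, policy))
print(admits_power_on(total, reserved, large_vm, policy))
```

In this example the larger VM is rejected on memory alone, even though CPU capacity is still well above its threshold.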

Hi,

This is the best article I’ve ever read on VMware HA. Thank you so much for this great, informative article.

I also have one question. Could you please elaborate on what happens if a VM goes down: what action does HA take to restart that machine on another host in the cluster in 5.x?

Thanks And Regards

Harendra Kumar

If VM Monitoring is configured in the HA cluster, the FDM agent monitors each VM through its VMware Tools heartbeat. So if a VM goes down for any reason, HA attempts to reset it on the same host (as there is no issue with the host itself).